Google DeepMind has officially released SIMA 2, the next generation of its Scalable Instructable Multiworld Agent.

It is designed to play 3D open-world games, carry out tasks within them, and keep improving by learning from its own experience.

SIMA 2 interprets the visual input of each game frame and combines it with goals issued by a human, such as “build a shelter” or “find the red house.”

It then carries out actions through keyboard-and-mouse-style controls.

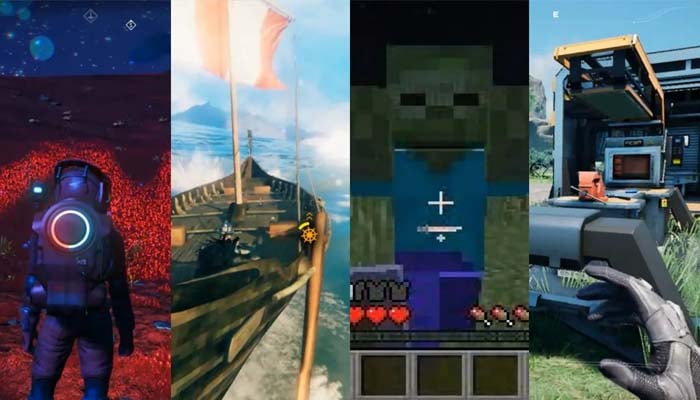

Notably, SIMA 2 can adapt to a range of new games it was not trained on.

In tests in unfamiliar environments such as MineDojo and the Viking survival game ASKA, SIMA 2 achieved better results than the first version.

The system also accepts multimodal prompts, including sketches, emojis, and instructions in multiple languages, and it can transfer concepts from one game to another.

For example, after learning “mining” in one game, it can apply that knowledge to “harvesting” in another.

SIMA 2 is trained on a mix of human demonstration data and annotations generated automatically by Gemini.

Skills it learns in unfamiliar environments are fed back into its training data, reducing its reliance on human labeling.

Despite its gaming abilities, SIMA 2 is not intended primarily as a gaming agent. DeepMind sees it as a foundation for future real-world robots that can follow natural-language instructions and perform a variety of tasks.